Mark Zuckerberg’s recent announcements about sweeping changes at Meta have stirred up some strong reactions. From moving content moderation teams to Texas to abandoning fact-checking as we know it, the updates seem to signal a seismic shift in the company’s approach to content regulation and platform governance. As someone with a journalism background, I can’t help but dissect the implications of these changes, and they’re far from reassuring.

Free Speech vs. Hate Speech: The Slippery Slope

Zuckerberg’s emphasis on embracing “free speech” while shedding the constraints of censorship may sound noble on the surface, but the nuances are critical. Free speech, as protected by the First Amendment, doesn’t extend to defamation, fraud, incitement to violence, or false advertising. Yet, Meta seems to be setting the stage for a platform with minimal guardrails.

This shift feels less like championing freedom and more like opening the floodgates to unchecked misinformation. With roughly 54% of U.S. adults at least sometimes getting news from social media, according to Pew Research, and Facebook and Instagram among the most-used platforms for it, the absence of reliable fact-checking is alarming. Instead of addressing bias within its fact-checking teams, Meta has chosen to throw the baby out with the bathwater. The result? A potential “Wild West” of misinformation, where users are left to fend for themselves.

AI and Deepfakes: A Perfect Storm

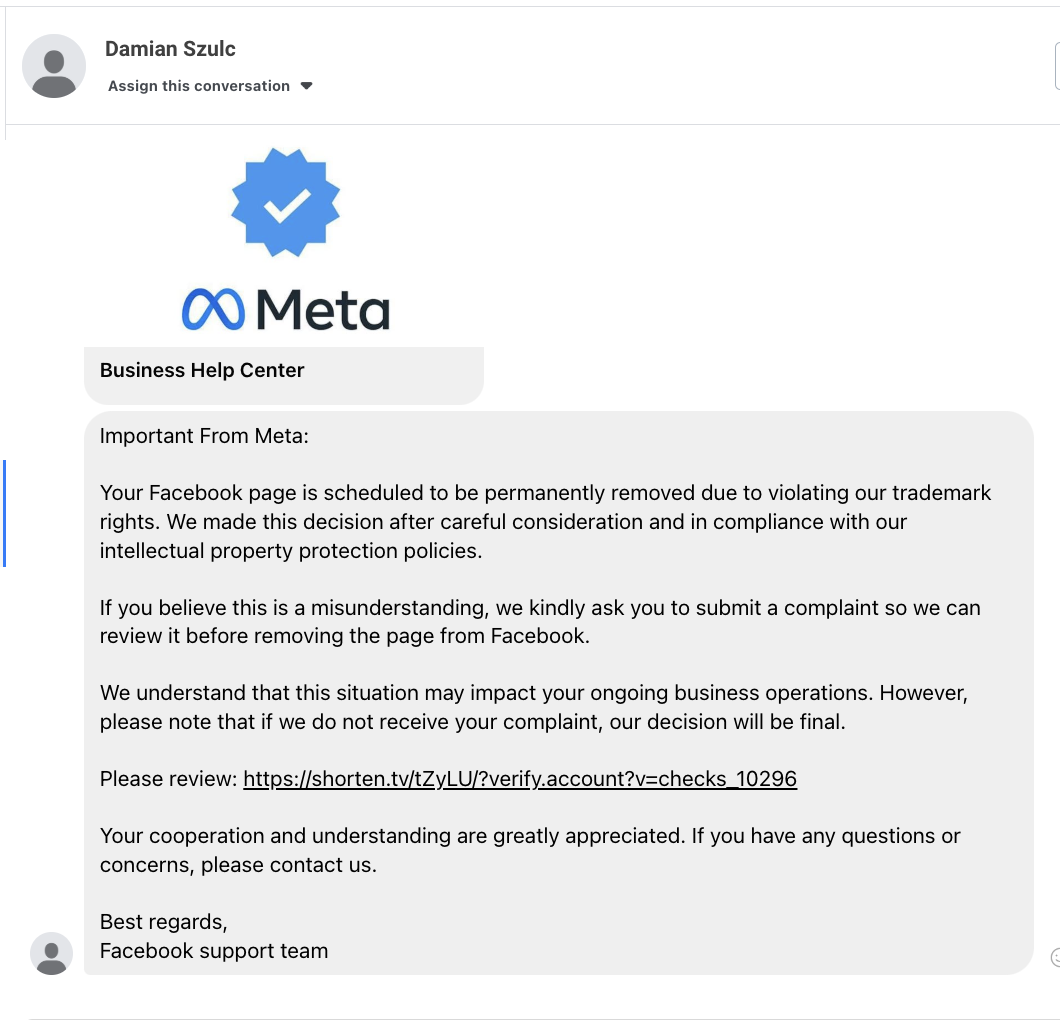

Adding to the chaos is the widespread adoption of AI and the rise of deepfakes. Already, I’ve seen individuals in my network duped by AI-generated images, unaware of their origins. Meta is rolling out a Community Notes feature, and although we don’t yet know exactly how it will work, the premise is that Meta will rely on users to flag harmful or misleading content. The responsibility shifts away from the company and onto its community. How convenient. This approach might sound empowering, but in reality, it’s a half-baked solution that places an unnecessary (and unwanted) burden on everyday users to moderate the platform’s content.

The Good: Loosening the Reins on Content Creation

One silver lining is the potential easing of restrictions on certain industries, particularly those whose content has been heavily restricted in the past. Companies and creators who’ve battled unwarranted content flagging might find some relief, as scaling back over-enforcement could mean fewer mistakes and fewer takedowns of legitimate content.

Another positive is the reintroduction of politics and civic content, which will now have equal visibility alongside other topics on the platform. This could create opportunities for more robust conversations and ensure important civic discussions aren’t buried by the algorithm—though, as with all things Meta, this comes with its own risks.

Even with these changes, we can’t ignore the potential for the platform’s algorithms to become more politically aligned with the current administration’s agenda. The inner workings of these systems remain a black box to users, leaving us in the dark about how decisions are made and what content is prioritized. This lack of transparency means that, while the changes might appear more balanced on the surface, we’ll never fully know the extent of the bias baked into Meta’s operations.

Additionally, if Meta can’t address the rampant bot activity—such as the scams plaguing business pages for years—can it truly handle the complexities of managing civic and political discourse without veering into chaos? These are the questions that remain unanswered as we enter this “new era.”

The Move to Texas: Solving Bias or Succumbing to Pressure?

Zuckerberg’s decision to relocate Trust and Safety and content moderation teams from California to Texas feels like a “big hat, no cattle” moment—a flashy gesture with little substance behind it. Framing this move as a way to reduce bias in content moderation doesn’t address the real problem: bias is built into the algorithms and policies Meta controls, not the location of its teams.

Sure, Texas might symbolize independence and a fresh start, but this isn’t a cowboy riding in to save the day—it’s more like changing the barn’s location while ignoring the wild stampede of misinformation galloping through the platform. The real work lies in addressing systemic issues, not just shifting zip codes for optics.

By making this move, Meta is playing into the pressures of the “new era” rather than tackling the structural problems that keep its platforms vulnerable to manipulation. This change might look good on paper, but it does little to instill trust or provide real solutions for users.

Censorship vs. Regulation Globally: A False Dichotomy

One of the most frustrating aspects of Zuckerberg’s remarks is his conflation of censorship and regulation. There’s a world of difference between silencing voices and setting reasonable standards to ensure content integrity. Pushing back against global censorship is one thing, but completely abandoning the idea of thoughtful regulation is reckless. I said what I said.

How Will This Affect You?

Unless you’re in a highly regulated industry that’s been repeatedly flagged or silenced by Facebook in the past, these changes might not have a significant impact on you as a content creator. But as a consumer? That’s another story.

With fewer guardrails in place, the platform is likely to see a spike in misinformation, harmful content, and polarizing discussions. If you rely on Facebook or Instagram for news, these changes could make it harder to separate fact from fiction. For everyday users, the shift from fact-checkers to Community Notes means that the quality of information you see will depend heavily on who’s flagging content—and their motivations.

Content creators in less regulated industries might enjoy fewer restrictions, but this also comes with the increased responsibility of ensuring their content remains authentic and credible. The era of “post and pray” may be over, but the era of “post and get scrutinized by your peers” has just begun. Can’t wait! (<< sarcasm)

To get a clearer picture of what’s ahead for Facebook, Instagram, and Threads, listen to Mosseri’s statement. It’s more professional and seemingly less biased than Zuckerberg’s, though it doesn’t erase the bias behind these decisions. Also, is it just me, or does Mosseri look a bit worried about this whole approach? Blink twice if you need help, sir.

Final Thoughts

At the heart of these updates is a troubling realization: Zuckerberg’s vision for Meta appears to be driven by profit and political expediency rather than the well-being of its users or the integrity of its platforms. The repeated mentions of the “new era” and the current administration reveal a company more interested in aligning with power dynamics than safeguarding its community.

While some creators may celebrate the loosening of restrictions, the broader implications—misinformation, unchecked hate speech, and the erosion of trust—are deeply concerning. The question isn’t whether these changes will create a new Meta era but whether they’ll create a better one. Only time will tell, but from where I stand, the future looks less like innovation and more like chaos.

What are your thoughts on Meta’s latest updates? Do you see them as a step forward or a step too far?